Chatbot: Generating UI on the fly

April 28, 2024 • 5 min

I've been experimenting with the Vercel AI SDK for the last couple of weeks and tried to come up with a project that both helps me get familiar with AI-related technologies and combines different functionalities, such as calling API endpoints and filling pre-built components with the received information. Yes, even though the title says "generating UI on the fly", that's not exactly what this project does. There are real examples of on-the-fly UI generation, like V0 by Jared Palmer at Vercel ↗; however, it's not as easy to achieve as you might imagine.

Vercel's AI SDK ↗ provides multiple options for interacting with AI tools and LLMs like GPT. For simple chatbot-like projects that only stream plain text, it's probably simpler to use completion API routes and receive the stream from LLM providers like OpenAI, but generative UI is a completely different approach. Let me break it down for you with what I've learned over the last couple of weeks.

First of all, generating UI elements requires keeping your model's AI state in sync with the UI state, so that communication between your model and your application stays consistent. In other words, you make your model aware of what is happening in your app; later, this is what lets it know what the user is doing with the served components. The AI state is the serializable context the model reads, while the UI state holds what the client actually renders. Since the UI components we serve through the AI stream need data to display, passing that data down to them manually would be quite painful. Fortunately, Vercel provides a solution: the following function (the AI SDK's `createAI`) creates a provider that we wrap our app with, so that AI and UI state can be accessed from anywhere in the app.
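To make the two kinds of state concrete, here is a framework-free TypeScript sketch. It is not the AI SDK's `createAI`: `AIMessage`, `UIMessage`, and `createChatStore` are hypothetical names, and plain strings stand in for React elements. It shows the idea described above: AI state is serializable context for the model, UI state is what the user sees, and both are updated through one shared store that any part of the app can reach.

```typescript
// Hypothetical, framework-free model of AI state and UI state kept in sync.
// This is NOT the AI SDK's createAI; strings stand in for React elements.

// AI state: serializable context the model reads on every turn.
interface AIMessage {
  role: "system" | "user" | "assistant" | "tool";
  content: string;
}

// UI state: what the client renders for each message.
interface UIMessage {
  id: number;
  display: string;
}

interface ChatStore {
  getAIState(): readonly AIMessage[];
  getUIState(): readonly UIMessage[];
  // Records the model-facing message and its rendered counterpart together.
  push(ai: AIMessage, display: string): void;
}

function createChatStore(
  initialAIState: AIMessage[] = [],
  initialUIState: UIMessage[] = [],
): ChatStore {
  const aiState = [...initialAIState];
  const uiState = [...initialUIState];
  let nextId = uiState.length;
  return {
    getAIState: () => aiState,
    getUIState: () => uiState,
    push(ai, display) {
      aiState.push(ai);
      uiState.push({ id: nextId++, display });
    },
  };
}

// A single store shared by the whole app plays the role of the provider.
// The system prompt lives only in AI state; the user never sees it.
const store = createChatStore([{ role: "system", content: "You are a GitHub assistant." }]);
store.push({ role: "user", content: "Show me octocat's profile" }, "Show me octocat's profile");
store.push(
  { role: "tool", content: JSON.stringify({ tool: "showProfile", login: "octocat" }) },
  '<ProfileCard login="octocat" />',
);
console.log(store.getAIState().length, store.getUIState().length); // 3 2
```

The two arrays don't have to be the same length: context the model needs (like the system prompt) has no visual counterpart, while every rendered element should have a matching entry the model can read.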

Initialising AI provider

Simply provide initial values along with the actions that handle UI generation. See Vercel's repo for a detailed example of the initial values here ↗. The AI SDK also provides functions for embedding UI elements into the AI stream, such as `render` and `streamUI`, and a tool's `generate` function can `yield` intermediate UI before returning the final component. Generating a simple UI requires the following:

- Pre-prompting the LLM with the `system` role.
- Type-safe function parameters with a description, so the LLM understands the function's use case.
- A function body through the `generate` method (the parameters are received as props).
- An update to the AI state obtained from `getMutableAIState`.
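The four ingredients above can be put together in a small, self-contained sketch. In the real SDK they roughly correspond to the `system` option of `streamUI`, a tool's `description` and zod `parameters`, its `generate` function, and the object returned by `getMutableAIState()`. Everything below (`ToolDefinition`, `showProfile`, `runToolCall`, and the hand-written `parse` check standing in for a schema library) is hypothetical and only meant to show how the pieces fit.

```typescript
// Hypothetical, framework-free sketch of the four ingredients; not the AI SDK API.

// 1. Pre-prompt: the system message the model sees first.
const SYSTEM_PROMPT =
  "You are a GitHub assistant. Call showProfile when the user asks about a GitHub user.";

// 2. Typed parameters plus a description the model reads to decide when to call the tool.
interface ProfileParams {
  username: string;
}

interface ToolDefinition<P> {
  description: string;
  // Runtime check standing in for a schema library such as zod.
  parse(raw: unknown): P;
  // 3. Function body: receives the parsed parameters like props.
  generate(params: P): string;
}

const showProfile: ToolDefinition<ProfileParams> = {
  description: "Show the GitHub profile of a user, given their username.",
  parse(raw) {
    if (typeof raw !== "object" || raw === null) throw new Error("expected an object");
    const username = (raw as { username?: unknown }).username;
    if (typeof username !== "string" || username.length === 0) {
      throw new Error("username must be a non-empty string");
    }
    return { username };
  },
  generate: ({ username }) => `<ProfileCard username="${username}" />`,
};

// 4. Mutable AI state, standing in for what getMutableAIState() gives you.
const aiState: { role: string; content: string }[] = [
  { role: "system", content: SYSTEM_PROMPT },
];

// Simulates the model choosing the tool with some JSON arguments.
function runToolCall(rawArgs: unknown): string {
  const params = showProfile.parse(rawArgs);
  const ui = showProfile.generate(params);
  // Record the call so the model knows what was rendered.
  aiState.push({ role: "tool", content: JSON.stringify({ tool: "showProfile", ...params }) });
  return ui;
}

console.log(runToolCall({ username: "octocat" })); // <ProfileCard username="octocat" />
```

Validating the arguments before `generate` runs matters because the model produces them as free-form JSON; the schema is what turns that JSON into the typed props the component expects.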

A simple function for the LLM to call

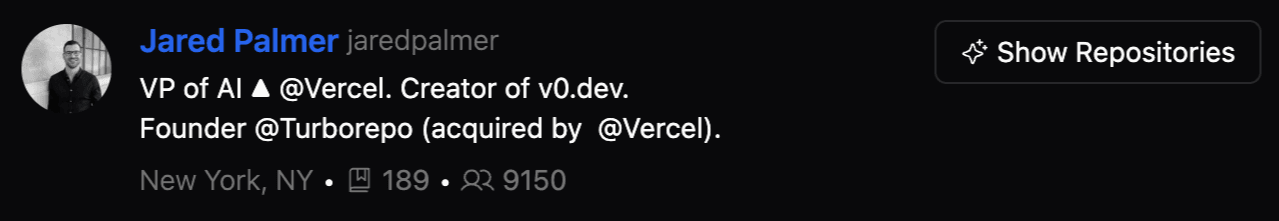

The code above follows the steps described and creates a UI element that displays a user's GitHub profile. `yield`, the JavaScript generator keyword used inside the tool's `generate` function, makes the experience more user-friendly: as soon as the function is called, it yields a skeleton of the UI element while we wait for the data. Once the data arrives, wherever it comes from, the skeleton is removed from the DOM and replaced with the actual component.
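The skeleton-then-component behaviour comes from JavaScript's async generators, so it can be reproduced without the SDK. This is a hypothetical sketch: `profileComponent`, `consumeFrames`, and the stubbed fetcher (with made-up data) are illustrative, strings stand in for React elements, and a real implementation would fetch from GitHub's `GET /users/{username}` endpoint instead of a stub.

```typescript
// Hypothetical sketch of the skeleton-then-component pattern with an async generator.
// Strings stand in for React elements; in practice fetchProfile would call
// GitHub's REST endpoint GET https://api.github.com/users/{username}.

interface GitHubProfile {
  login: string;
  name: string | null;
  public_repos: number;
}

type FetchProfile = (username: string) => Promise<GitHubProfile>;

// Yields a skeleton right away, then returns the finished component.
async function* profileComponent(
  username: string,
  fetchProfile: FetchProfile,
): AsyncGenerator<string, string, void> {
  yield "<ProfileSkeleton />";
  const p = await fetchProfile(username);
  return `<ProfileCard login="${p.login}" name="${p.name ?? ""}" repos=${p.public_repos} />`;
}

// Simulates the client: records every frame; a real UI shows only the latest one.
async function consumeFrames(
  gen: AsyncGenerator<string, string, void>,
  frames: string[],
): Promise<string> {
  while (true) {
    const { value, done } = await gen.next();
    frames.push(value);
    if (done) return value;
  }
}

// Usage with a stubbed fetcher (made-up data) instead of a real network call.
const stubFetch: FetchProfile = async (login) => ({ login, name: "Example User", public_repos: 2 });
const shown: string[] = [];
consumeFrames(profileComponent("octocat", stubFetch), shown).then(() => console.log(shown));
```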

Note: The code in the image above is not a comprehensive example.

The profile function simply injects the following component into the stream.

Interacting with LLM-generated UI elements requires extra functionality and state updates: from the moment an element is generated, every interaction the user has with it should update the state so that the LLM knows what is going on in the UI. The profile component exposes a single action that triggers another function call through the LLM and injects further components into the stream. This is done by a separate action.
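One way to picture "every interaction updates the state" is an append-only log that the model reads on its next turn. The sketch below is hypothetical (`ConversationState`, `recordInteraction`, and the `[UI]` text format are illustrative, not part of the AI SDK); it records both the generation of a component and a later click on it.

```typescript
// Hypothetical sketch: interactions with a generated component are written into
// the model-facing state so the LLM can "see" them on its next turn.

interface StateEntry {
  role: "user" | "assistant" | "tool";
  content: string;
}

class ConversationState {
  private readonly entries: StateEntry[] = [];

  record(entry: StateEntry): void {
    this.entries.push(entry);
  }

  // Copy of what would be sent to the model as context.
  snapshot(): StateEntry[] {
    return this.entries.map((e) => ({ ...e }));
  }
}

// Describes a UI interaction in plain words the model can read later.
function recordInteraction(
  state: ConversationState,
  component: string,
  action: string,
  data: Record<string, unknown>,
): void {
  state.record({ role: "user", content: `[UI] ${action} on ${component}: ${JSON.stringify(data)}` });
}

const conversation = new ConversationState();
// 1. Generating the component is recorded...
conversation.record({ role: "tool", content: JSON.stringify({ rendered: "ProfileCard", login: "octocat" }) });
// 2. ...and so is every interaction with it, e.g. a "Show repositories" click.
recordInteraction(conversation, "ProfileCard", "show-repositories", { login: "octocat" });

const entries = conversation.snapshot();
console.log(entries[entries.length - 1].content);
// [UI] show-repositories on ProfileCard: {"login":"octocat"}
```

Recording the click as a `user` entry is one design choice among several; what matters is that it ends up in the state the model reads, rather than only in client-side UI state.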

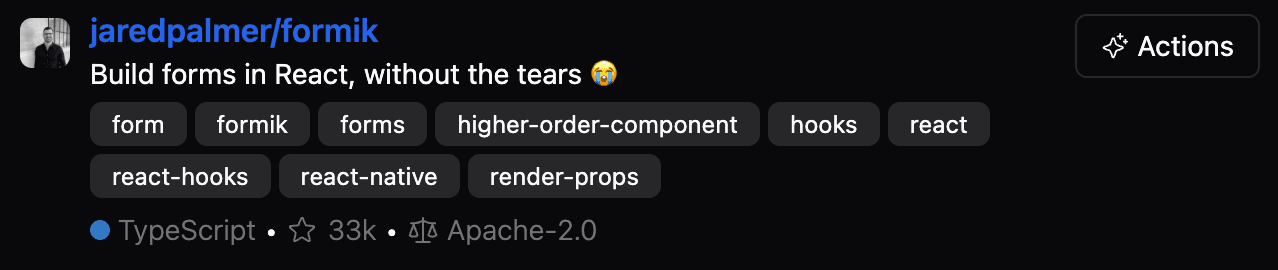

The server action that fetches the user's repositories

There are probably more efficient ways of calling actions, but this one is simple enough: it injects another UI element into the stream and updates the AI state so the model knows what is going on in the UI. The `.update()` and `.done()` methods (for example, on the object returned by `createStreamableUI`) let you change a component's content while data is being fetched or while the user interacts with it: `.update()` replaces the current content, and `.done()` finalizes it and closes the stream. The usage is as follows.
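A tiny stand-in makes the difference between the two methods concrete. It mirrors the documented behaviour of `createStreamableUI` (`.update()` replaces the current node, `.done()` optionally sets a final node and closes the stream), but `StreamableUI` and `showRepositories` are hypothetical, strings stand in for React elements, and throwing on updates after `.done()` is a choice of this model.

```typescript
// Hypothetical, minimal model of a streamable UI; not the AI SDK implementation.
type Listener = (node: string, final: boolean) => void;

class StreamableUI {
  private current: string;
  private closed = false;
  private readonly listeners: Listener[] = [];

  constructor(initial: string) {
    this.current = initial;
  }

  // Immediately delivers the current node, then every later change.
  subscribe(listener: Listener): void {
    this.listeners.push(listener);
    listener(this.current, this.closed);
  }

  // Replaces what is currently displayed; the stream stays open.
  update(node: string): void {
    this.assertOpen();
    this.current = node;
    this.emit();
  }

  // Optionally sets a final node, then closes the stream for good.
  done(node?: string): void {
    this.assertOpen();
    if (node !== undefined) this.current = node;
    this.closed = true;
    this.emit();
  }

  private assertOpen(): void {
    if (this.closed) throw new Error("stream already closed");
  }

  private emit(): void {
    for (const l of this.listeners) l(this.current, this.closed);
  }
}

// Hypothetical action body: show progress while fetching, then the final list.
async function showRepositories(ui: StreamableUI, fetchNames: () => Promise<string[]>): Promise<void> {
  ui.update('<Spinner label="Fetching repositories" />');
  const names = await fetchNames();
  ui.done(`<RepoList count=${names.length} />`);
}

const ui = new StreamableUI("<RepoListSkeleton />");
const seen: string[] = [];
ui.subscribe((node) => {
  seen.push(node);
});
// Sample data instead of a real GitHub request.
showRepositories(ui, async () => ["Hello-World", "Spoon-Knife"]).then(() => console.log(seen));
```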

Repository action call

Here we simply call our action, pass it the data already used by the previously rendered component, and add the result to our chat as a new message. The resulting component looks something like this:
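Stripped of React, the call-and-append step looks roughly like this. In an AI SDK app the setter would typically come from `useUIState` and the action from `useActions`; here `createState`, `getRepositories`, and `onShowRepositories` are hypothetical stand-ins, and the repository names are placeholders.

```typescript
// Hypothetical model of "call the action, append its result as a new chat message".

interface UIMessage {
  id: number;
  display: string;
}

type Updater<T> = (current: T) => T;

// Minimal stand-in for a React-style state hook: a getter plus a functional setter.
function createState<T>(initial: T): [() => T, (update: Updater<T>) => void] {
  let value = initial;
  return [
    () => value,
    (update) => {
      value = update(value);
    },
  ];
}

let nextMessageId = 2;

// Hypothetical action: receives the login the profile card already had.
async function getRepositories(login: string): Promise<UIMessage> {
  const repos = [`${login}/first-repo`, `${login}/second-repo`]; // placeholder data
  return { id: nextMessageId++, display: `<RepoList items='${JSON.stringify(repos)}' />` };
}

const [getMessages, setMessages] = createState<UIMessage[]>([
  { id: 1, display: '<ProfileCard login="octocat" />' },
]);

// Click handler on the profile card: reuse its data, append the result.
async function onShowRepositories(login: string): Promise<void> {
  const message = await getRepositories(login);
  // Functional update, so concurrent appends never overwrite each other.
  setMessages((current) => [...current, message]);
}

onShowRepositories("octocat").then(() => console.log(getMessages()));
```

Using a functional update (`current => [...current, message]`) rather than writing a new array from a previously captured value keeps appends from overwriting each other when several actions resolve close together.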

The project handles a few more actions, none of them much more complicated. Since I was trying to understand how all of this works under the hood, I limited myself to GitHub-related actions built on the GitHub API. However, there is practically no limit to what you can do in terms of UI generation; it all depends on your creativity and coding skills.
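Since every action in the project goes through the GitHub API, here is a hedged sketch of a small helper for the documented `GET /users/{username}/repos` endpoint (its `sort` and `per_page` query parameters are part of GitHub's REST API). `getUserRepos`, `reposUrl`, and the `HttpGet` type are hypothetical; the HTTP function is passed in so the helper can be tested without network access.

```typescript
// Hypothetical helper around GitHub's REST endpoint GET /users/{username}/repos.

interface RepoSummary {
  name: string;
  html_url: string;
  stargazers_count: number;
}

// Narrow HTTP type: the global fetch satisfies it, and so does a test stub.
type HttpGet = (url: string) => Promise<{ ok: boolean; status: number; json(): Promise<unknown> }>;

function reposUrl(username: string, perPage = 10): string {
  return (
    `https://api.github.com/users/${encodeURIComponent(username)}/repos` +
    `?sort=updated&per_page=${perPage}`
  );
}

async function getUserRepos(username: string, get: HttpGet): Promise<RepoSummary[]> {
  const res = await get(reposUrl(username));
  if (!res.ok) throw new Error(`GitHub API responded with status ${res.status}`);
  const data = (await res.json()) as RepoSummary[];
  // Keep only the fields the repository component needs.
  return data.map(({ name, html_url, stargazers_count }) => ({ name, html_url, stargazers_count }));
}
```

In a server action it could be called as `getUserRepos(login, fetch)`. Keep in mind that unauthenticated requests to the GitHub API are heavily rate-limited, so anything beyond experimenting should send a token.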

Improvements

Since this is a simple project with only a few actions, I didn't use an agent structure, where separate agents filter data or each agent does one niche job at a time to reduce the number of problems that might occur. I am, however, planning to use agents in future projects with more complex structures.

Please be aware that I am no expert in coding or in AI-related technologies, so there might be problems both in my code and in the examples above. The Vercel AI SDK is a hot repo that receives patches quite often, so my code might not be up to date either.

If you'd like to check out my code, see my repo here ↗

Another hot repo built on the AI SDK is Morphic by Miurla. I recommend checking that out too here ↗

Cheers!